The Notebook: A plea for a little more nuance in AI discourse

Where the City’s thinkers get a few things off their chest. Today, Marc Warner, CEO at AI firm Faculty, takes the Notebook pen.

City bosses need nuance to reap AI’s rewards

People are increasingly prone to veer between extreme ends of a spectrum, as we’ll see in America this November. Unfortunately, this trait is also evident in the AI debate.

Depending on who you ask, AI may be a panacea or a malign force threatening humanity. You might expect the CEO of an AI company to argue the former – but both labels are unhelpful. So, this is a plea for nuance.

AI is a very broad term. No one says “biology” or “physics” is unequivocally “good” or “bad”. Let’s appreciate that not all AI is the same.

General AI aims to be a universal problem solver, like humans. We don’t currently understand how to build this or how we might control it. At some point, it could surpass human capabilities on a large range of tasks. Extreme caution – and potentially regulation – should therefore be applied. But hopefully that’s some way off in the future.

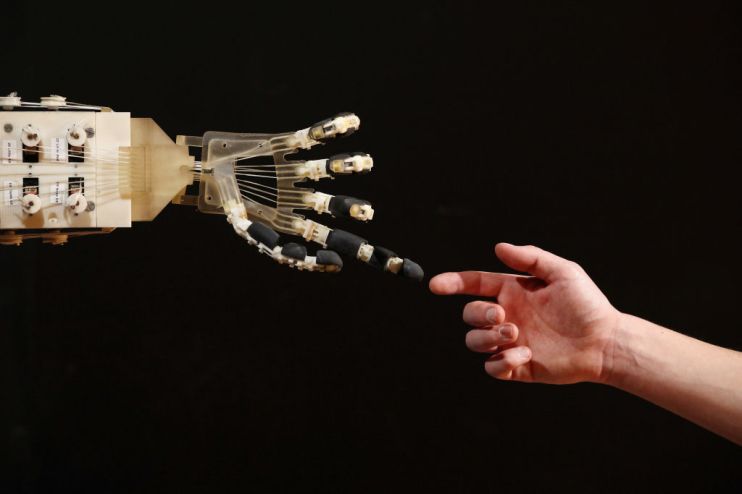

But we can safely embrace narrow AI as fast as possible. Narrow AI solves specific problems and has been used successfully for decades. It helps radiographers read mammograms, law enforcement remove terrorist propaganda and businesses sell more products. Well implemented, it will transform the back offices of all the financial institutions of the City, and we can’t afford to miss out.

So we must think carefully about the implementation of narrow AI, including GenAI.

And while ChatGPT may have placed generative AI on most people’s radar, it’s actually been around much longer. We’ve been implementing GenAI in organisations since 2018, and have learnt a few lessons about how to do that well.

First, AI solutions must be designed to serve business outcomes. If you treat this as a technology looking for a problem, you’ll waste a lot of time and money. Second, your AI should be human-centric. It should support you, not supplant you. Finally, you should make the transformation manageable by executing it as a series of individually valuable steps that sum to something collectively transformative.

The AI era is here, so let’s have a sensible debate about reaping the rewards whilst managing the risks. Less hyperbole, more nuance please.

How AI could save lives

Recently, my cofounder joined the board of Target Ovarian Cancer, the UK’s leading ovarian cancer charity, as a trustee. I was shocked to learn that one in seven women die within two months of an ovarian cancer diagnosis – purely because the discovery comes too late. It is a dire situation. Lots must be done to address this, including better use of AI and technology to help with earlier identification. I’m hopeful that we’ll see progress in this area, and we’ll look to take any lessons learnt and use them to help other charities too.

Crossing that bridge

On the surface, bridges and AI have little in common. But, as Stuart Russell, professor of computer science at the University of California, Berkeley, once said, “when engineers design bridges, there’s not a bunch of designers and then a bunch of ethicists saying ‘By the way, make sure it doesn’t fall over.’ Of course it’s not supposed to fall over; it wouldn’t be a bridge if it just fell over.”

We need to approach AI similarly – with utility and safety on an equal footing.

Back straight, head up

At the suggestion of a coach, I recently started the Alexander technique, a practice which focuses on improved posture and movement. It’s normally the preserve of performers; it forms part of the curriculum at RADA and the Royal Schools of Music. Yet Alexander has been overlooked by businesses. At a basic level, with all the time we spend hunched over computers, its original formulation as a postural correction technique is helpful. But, viewed more broadly, it’s also an interesting way to help manage the ups and downs of startup (and corporate) life. I’ve been working with Anthony Kingsley, and I can’t recommend him highly enough.

Quote of the week

“Recognize reality even when you don’t like it.”

Charlie Munger

Charlie Munger was a hero of mine, and I was sad to see that he passed away recently. There are so many great ‘Mungerisms’, but this is one that rings true to our values at Faculty.

What I’ve been listening to

I’ve recently been enjoying the Art of Accomplishment podcast. This is a slightly strange beast – it’s probably best categorised as executive coaching, but like nothing I’d ever heard before, and all the more interesting because of it. It’s a blend of traditional wisdom and modern neuroscience, packaged up for Silicon Valley CEOs. And yet both my mum, a former PE teacher, and I find it interesting and helpful. Definitely worth a listen, and do start from the very beginning!