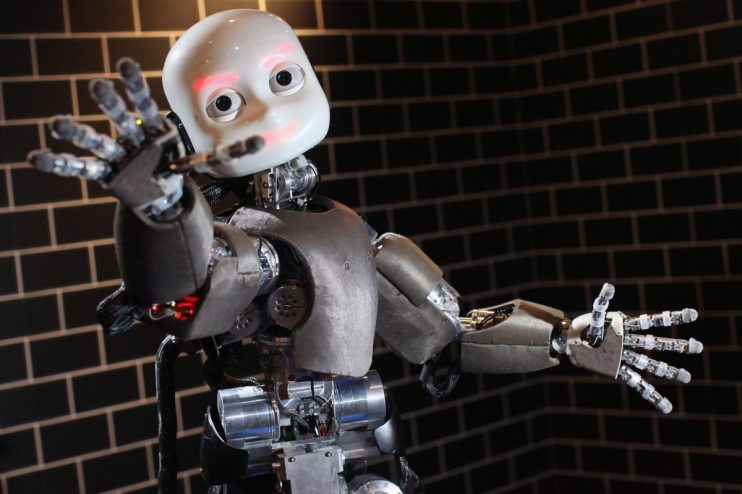

DEBATE: Is the EU’s approach to regulating artificial intelligence misguided?


James Lawson, chief AI evangelist at DataRobot, says YES.

Artificial intelligence (AI) is transforming society for the better. We’re using it to improve services and save lives. Applications range from fighting fraud to preventing forest fires and creating new medicines.

The EU’s desire to be the world leader in AI and to protect citizens is laudable, but its regulatory stance risks having the opposite effect.

The rules seek to restrict all “high risk” applications, but that category is poorly defined. A precautionary approach is in tension with the EU’s leadership ambitions, and could fail to consider the costs of inaction.

Too much caution could also drive entrepreneurs and talent abroad. By comparison, the US says that regulators must not “needlessly hamper” AI, considering benefits and costs simultaneously. This is a more even-handed approach, providing fertile ground for innovation.

Today, none of the top 10 global tech companies are European. It’s a safe bet that, with misguided regulation, this trend will continue into the AI era.

Roch Glowacki, associate at international law firm Reed Smith, says NO.

Developing AI regulation is a complex task with high stakes, given the technology’s potential to fundamentally affect all aspects of our lives. While many have attacked the EU’s approach, much of this criticism is unfair and misguided, and fails to appreciate the very delicate line that policymakers must tread here.

Simply put, the EU wants to inspire public trust and ensure that data flowing through the economy (including, for example, our financial services and healthcare industries) is legitimate, high quality, and accessible where appropriate.

Just as foods must disclose GMOs or allergens, AI-based services could be required to disclose that an algorithm, rather than a human, took certain high-stakes decisions or actions.

The EU does not want to stifle innovation or prohibit the use of “high risk” AI. Instead, the aim is to avoid internal market fragmentation, to promote open data initiatives, to establish a risk-based approach to AI governance, and to develop a framework for “trustworthy” AI, so that it becomes an asset that may be exported across the world.

Main image credit: Getty