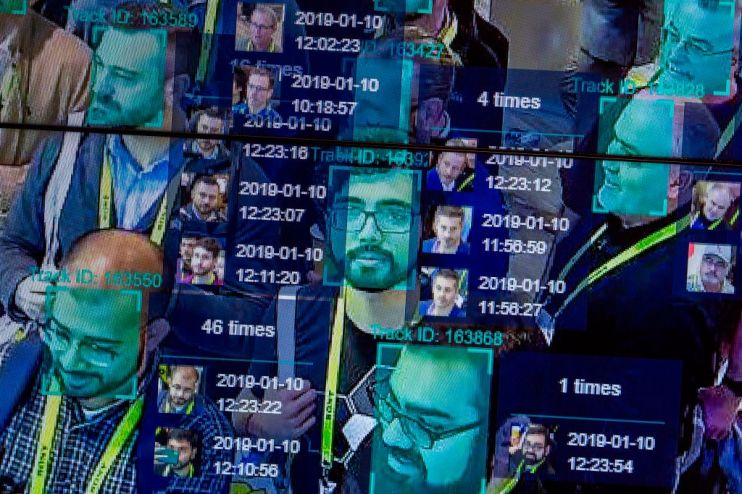

With its foray into facial recognition, Uber is normalising mass surveillance

The news late last year that Uber plans to introduce facial recognition technology seems to have mostly escaped the notice of the public, buried as the story was beneath five or six paragraphs in most of the reports on the loss of the firm’s London licence.

Or perhaps, as one depressing Wall Street Journal headline claimed, we Brits are simply “used to surveillance”. The British Security Industry Association tells us that, in 2013, there was one surveillance camera in Britain for every 10 people. The number of innocuous little boxes peeking out at us from street corners has risen by some four million since.

But used to it or not, we should be concerned about Uber’s plans.

The ride-hailing firm is ostensibly trying to ensure the safety of its passengers, after it was revealed that more than 14,000 trips had been taken in London with drivers who had faked their identity. Yet this was only possible because Uber had failed to close a loophole in its own system, one that allowed unverified drivers to upload their photos to other drivers’ accounts.

Uber, then, is making up for its own blunder by subjecting its drivers, many of whom are financially insecure and lack alternative employment options, to an oppressive kind of technology. It is not only forcing them to hand over sensitive biometric data, but also normalising an increasingly intrusive form of surveillance.

This sort of trend is hard to reverse once it gets going. It also emboldens the authorities, who continue to “pilot” facial recognition in what amounts in reality to operational use.

This was behind the legal complaint made last year by Ed Bridges. According to Bridges, his face had been scanned by a security camera while he was doing the Christmas shopping in 2017, and then again while he was attending a (peaceful) protest the following year. He argued that South Wales Police breached his rights when facial recognition software identified him in the footage.

Bridges lost his case but is now appealing. What’s more, on the day of the ruling, London mayor Sadiq Khan admitted that the Met Police had cheerfully been using this kind of software at a property development in King’s Cross, and that the images had been shared with the private company concerned. (Khan later wrote to the owner of the development to express his concerns.)

And we can be sure that use of this kind of technology will only increase over time.

Even if you deploy the term “national security” in its most expansive and euphemistic sense, such a warrantless incursion on individual liberty would hardly be justified. The technology is also dangerously inaccurate.

Professor Paul Wiles, the biometrics commissioner, has called police use of facial recognition thus far “chaotic”, while Timnit Gebru, technical co-lead of Google’s Ethical Artificial Intelligence Team, told a summit in Geneva in May that “there are huge error rates [in identification] by skin type and gender”.

Big Brother Watch uncovered through a Freedom of Information request that the Met Police’s facial recognition matches were 98 per cent inaccurate, and that the system misidentified 95 people at the Notting Hill Carnival.

Citing its imperfections as well as the threat it poses to civil liberties, San Francisco banned the use of facial recognition by transport and law enforcement agencies over the summer. Portland is currently considering the most comprehensive ban on the technology yet proposed in the US.

The UK may lose the chance to follow suit, or at the very least to shape the use of this kind of technology, if we fail to offer any resistance to its proliferation.

Facial recognition is a uniquely dangerous and innately oppressive technology. We should be very concerned about companies like Uber normalising its use, and suspicious of claims that it is for our own safety.

Main image credit: Getty