20 times that AI has tried to make it in the music business

Artificial intelligence (AI) is becoming omnipresent across every segment of cultural and economic activity. But this phenomenon is not new – AI has been trying to carve a name for itself in the music business as a collaborator, composer, artist and lyricist since 1951…

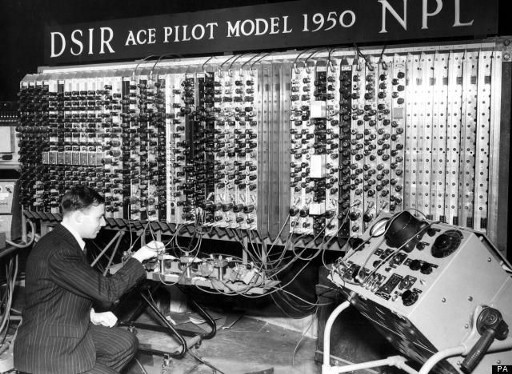

1) The first computer generated music (1951)

In 1951, computer-generated music was produced for the first time at the Computing Machine Laboratory in Manchester, where British mathematician Alan Turing worked. The computer used to generate the melodies filled much of the lab's ground floor. Several melodies were created, including "God Save the King" and "Baa, Baa Black Sheep".

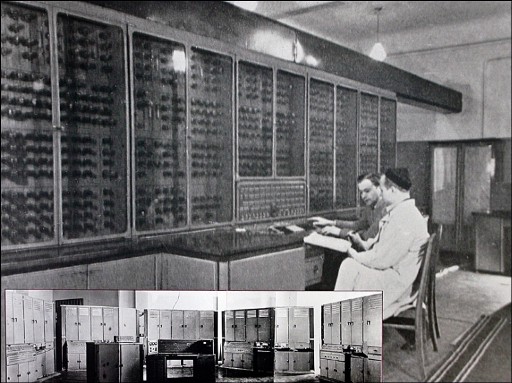

2) The first musical work written by a computer (1957)

Composer Lejaren Hiller and mathematician Leonard Isaacson programmed the ILLIAC I (Illinois Automatic Computer) to generate compositional material. The result was a piece written entirely by a computer: the "Illiac Suite for String Quartet".

3) The first algorithmic composition (1960)

Russian researcher R. Kh. Zaripov published the first-ever paper on algorithmic music composition using the URAL-1 computer. The paper was titled "An algorithmic description of a process of musical composition".

4) Computer-generated piano music (1965)

American inventor Ray Kurzweil premiered a piano piece created by a computer that could recognise patterns in existing musical compositions. The computer then analysed these patterns and used them to compose new melodies.

5) MIT Experimental Music Studios (1973)

The MIT Experimental Music Studio (EMS) was founded in 1973 by Professor Barry Vercoe and became the first facility in the world with digital computers dedicated full-time to computer music research and production. EMS researched and developed many important computer music technologies: real-time digital synthesis, live keyboard input, graphical score editing, and programming languages for music composition and synthesis.

6) The first International Computer Music Conference (1974)

The first International Computer Music Conference was held at Michigan State University in 1974. The International Computer Music Conference (ICMC) is now a yearly international conference for computer music researchers and composers.

7) Machine perception of musical rhythm (1975)

In 1975, researchers from the MIT Experimental Music Studio published the paper "Machine perception of musical rhythm" and developed a system for machine music perception that let a musician play fluently on an acoustic keyboard while the computer inferred and recorded the meter, the tempo and the note durations.

8) The EMI breakthrough (1980)

Experiments in Musical Intelligence (EMI), created by composer David Cope, was a major breakthrough in 1980. EMI used generative models to analyse existing music and create new pieces based on it. By analysing different works, EMI could generate original, structured compositions within the framework of a specific musical genre. EMI has since created thousands of works based on the music of many composers in a wide range of styles.

9) Sony Computer Science Laboratories (1988)

The Sony Computer Science Laboratories (Sony CSL) were founded in 1988 to conduct research in computer science. Sony CSL later became one of the key centres of research into the use of AI in music.

10) David Bowie and ‘Verbasizer’ (1995)

Musician David Bowie helped computer programmer Ty Roberts develop a program called the "Verbasizer" in 1995. The Verbasizer took literary source material and randomly reordered the words to create new combinations that could be used as song lyrics. The results appeared on several David Bowie albums.
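To illustrate the idea of randomly reordering source words into lyric lines, here is a minimal Python sketch. It is not the Verbasizer's actual code: the function name `verbasize` and its parameters (`words_per_line`, `lines`, `seed`) are hypothetical, and the sketch simply pools the words of several source texts and draws random lines from that pool.

```python
import random
from typing import List, Optional


def verbasize(sources: List[str], words_per_line: int = 6,
              lines: int = 4, seed: Optional[int] = None) -> List[str]:
    """Hypothetical sketch: pool the words of all source texts and
    build each lyric line from a random selection of those words."""
    rng = random.Random(seed)  # seeded generator so results can be reproduced
    pool = [word for text in sources for word in text.split()]
    k = min(words_per_line, len(pool))  # never ask for more words than exist
    # rng.sample picks k distinct positions from the pool, in random order
    return [" ".join(rng.sample(pool, k)) for _ in range(lines)]


if __name__ == "__main__":
    texts = [
        "the city sleeps beneath a silver rain",
        "strangers dance alone in borrowed light",
    ]
    for line in verbasize(texts, words_per_line=5, lines=3, seed=7):
        print(line)
```

Each run with a different seed gives a new set of word combinations, which a songwriter could then pick from, much as the paragraph above describes.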

11) AI technology pervades music (1997)

The music research team at Sony Computer Science Laboratory Paris was founded by French composer and scientist François Pachet. In 1997 he started a research programme focused on music and artificial intelligence. His team pioneered many technologies and filed more than 30 patents on applying AI to electronic music distribution, audio feature extraction and music interaction.

12) AI performs live alongside musicians (2002)

The "Continuator" algorithm was designed by researcher François Pachet. The Continuator could learn from musicians and play interactively with them during a live performance. Uniquely, it could continue composing a piece of music from the point where the live performance stopped.
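The Continuator is generally described as learning a Markov-style model of the phrases a musician plays. The Python sketch below is a deliberately simplified, hypothetical illustration using a first-order Markov chain over MIDI pitch numbers. The function names and parameters are assumptions, not Pachet's implementation, which is more sophisticated. It learns which pitches followed each pitch in the player's phrase, then continues from the last note played.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional


def learn_transitions(notes: List[int]) -> Dict[int, List[int]]:
    """For each pitch, record every pitch that followed it (first-order Markov model).
    Repeated transitions are stored repeatedly, so they are sampled more often."""
    transitions: Dict[int, List[int]] = defaultdict(list)
    for current, following in zip(notes, notes[1:]):
        transitions[current].append(following)
    return dict(transitions)


def continue_phrase(notes: List[int], length: int = 8,
                    seed: Optional[int] = None) -> List[int]:
    """Hypothetical sketch: continue from the last note the musician played."""
    if not notes:
        return []
    rng = random.Random(seed)
    transitions = learn_transitions(notes)
    current = notes[-1]
    continuation: List[int] = []
    for _ in range(length):
        candidates = transitions.get(current)
        if not candidates:
            # Dead end (this pitch was never followed by anything):
            # fall back to any pitch the musician used.
            candidates = notes
        current = rng.choice(candidates)
        continuation.append(current)
    return continuation


if __name__ == "__main__":
    phrase = [60, 62, 64, 62, 60, 67, 64, 62]  # C D E D C G E D as MIDI pitches
    print(continue_phrase(phrase, length=8, seed=1))
```

Because the continuation only reuses transitions heard in the input, it stays close to the player's own material, which is the "continue where the musician stopped" behaviour described above in its simplest form.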

13) AI completes the feedback loop (2009)

A computer program called Emily Howell created an entire album titled "From Darkness, Light". Emily Howell is an interactive interface that registers and takes into account feedback from listeners, and builds its own musical compositions from a source database. The software is based on latent semantic analysis.

14) The first classical music composed by AI (2010)

The musical composition "Iamus' Opus one" was created in 2010. It is the first fragment of professional contemporary classical music ever composed by a computer in its own style. The most famous composition by the Iamus computer is titled "Hello World!".

15) Computer Emily Howell releases ‘her’ second album (2012)

The computer program Emily Howell released 'her' second album, titled "Breathless", in 2012.

16) Flow Machines creates “Daddy’s Car” (2016)

Researchers at Sony had been working on AI-generated music for years, but "Daddy's Car" was the first time Sony had released a pop song composed by AI. To write the song, AI software called "Flow Machines" drew on a large database of songs, combining small elements of many tracks to create a new composition. "Daddy's Car" was composed in the style of The Beatles, while the arrangement was completed by human musicians.

17) Alex Da Kid collaborates with IBM Watson (2016)

The IBM Watson AI supercomputer was used to create the song "Not Easy" with artist Alex Da Kid. The song took first place in the Top 40 charts in the USA. Watson analysed a huge number of articles, blogs and social media posts to identify the most discussed topics of the time and to characterise the overall emotional mood.

18) The ‘I Am AI’ Album (2017)

American Idol star Taryn Southern released an album called "I AM AI", whose music was composed with an AI composition tool developed by Amper Music. Amper created the basic musical structures, while the rest of the work, including the lyrics, was done by Taryn Southern.

19) AI meets death metal – live (2019)

In 2019, Dadabots set up a non-stop YouTube livestream playing death metal generated entirely by AI algorithms. To train their neural network, the developers used songs by the Canadian metal band Archspire, which is known for its fast and aggressive tempo. As a result, the algorithm learned to generate fast drums, guitars and vocals that sounded like real death metal.

20) AI creates weather-dependent music (2019)

Icelandic singer and songwriter Björk collaborated with Microsoft to create AI-generated music called "Kórsafn", which responds to changing weather patterns and the position of the sun. Kórsafn draws on sounds from 17 years of Björk's musical archives to create new arrangements. Using Microsoft's AI and a live camera feed from the roof of the hotel where it plays on a loop, the music adapts to sunrises, sunsets and changes in barometric pressure. The result is an endless stream of new variations that creates a weather-dependent mood for hotel guests.